CatChat

Overview

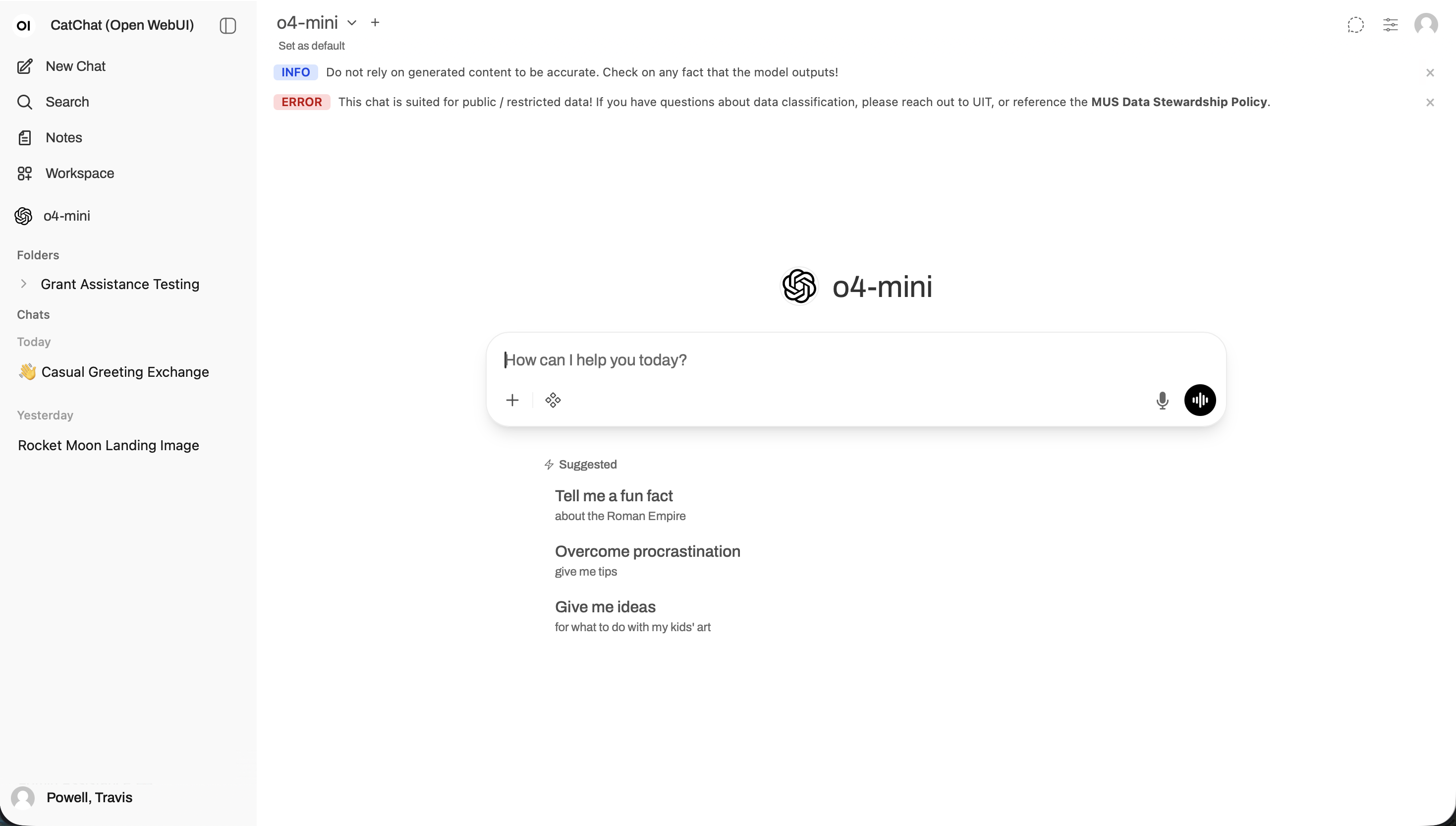

CatChat is a generative AI (large language model) portal provided by RCI (Research Computing Infrastructure). CatChat uses publicly available, open-weight models such as OpenAI's gpt-oss:120b (o4-mini), Mistral AI's mistral-small:24b (mistral), which is suited to programming, and Google's gemma3:27b (gemma).

CatChat is free to use for all students, faculty, and staff at Montana State University. Under the Bozeman Data Stewardship Standards, it is approved for use with data classified as “Public” (data that may be released to the general public in a controlled manner) or “Restricted” (such as FERPA-protected data, financial transactions that don’t include confidential data, contracts, etc.).

Approval for Public and Restricted data does not imply approval for any specific use case. Follow institutional, departmental, and instructor guidelines for allowable and appropriate uses of these tools. CatChat and its models are experimental and may produce inaccurate or incomplete results, so always verify their output.

Environmental Impact

CatChat is maintained by Research Computing and runs on computational resources provided by the Tempest supercomputer, which is powered by approximately 80% renewable energy and cooled by systems that do not consume water.

Getting Started

How to Use

Access and Security

Model Selection

Tool Usage

Agent Creation

Connect to External Providers

API Access

Available Models

- gpt-oss:120b (o4-mini)
- gemma3:27b (gemma)
- mistral-small:24b (mistral)
- granite4:3b (granite4)
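The API endpoint and authentication details are not given here, so the following is only a minimal sketch under stated assumptions: it assumes CatChat exposes an OpenAI-compatible chat completions endpoint that accepts the model tags listed above. `BASE_URL`, `API_KEY`, the `/chat/completions` path, and the helper names `build_chat_request` and `send` are hypothetical placeholders, not a confirmed CatChat API.

```python
import json
import urllib.request

# HYPOTHETICAL placeholders: the real CatChat base URL and API key are not
# documented here; replace them with the values provided by RCI.
BASE_URL = "https://catchat.example.org/api"
API_KEY = "YOUR_API_KEY"

# Model tags from the "Available Models" list. ASSUMPTION: the API accepts
# these tags (it may instead expect the short names in parentheses).
MODELS = {"gpt-oss:120b", "gemma3:27b", "mistral-small:24b", "granite4:3b"}


def build_chat_request(model: str, prompt: str,
                       base_url: str = BASE_URL,
                       api_key: str = API_KEY) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    if model not in MODELS:
        raise ValueError(f"unknown model tag: {model!r}")
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",  # assumed OpenAI-compatible path
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first reply choice."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("granite4:3b", "Summarize this abstract in one sentence: ...")
    print(req.get_method(), req.full_url)
    # print(send(req))  # performs a real network call; needs a valid URL and key
```

Building the request separately from sending it lets you inspect the payload without network access. Calling `send` only works once the placeholders are replaced with real values; if the service expects names such as `o4-mini` rather than the tags, adjust `MODELS` accordingly.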

Use Cases

| Use Case | Requirements | Recommended Models |
|---|---|---|
| Advanced reasoning and multi-domain research | Highest accuracy, long-context reasoning, strong factual recall, and cross-domain synthesis (science, engineering, policy, etc.) | gpt-oss:120b |
| Enterprise copilots / knowledge assistants | Strong reasoning, safe output, high reliability, API latency tolerance | gpt-oss:120b, gemma3:27b |
| General-purpose chatbots and customer service | Balanced cost–performance, multilingual fluency, context awareness | gemma3:27b, mistral-small:24b |
| Code generation and debugging (multi-language) | Syntax awareness, structured reasoning, efficiency on moderate hardware | gemma3:27b, mistral-small:24b |
| Lightweight internal automation (summaries, tagging, extraction) | Low latency, scalable inference, moderate reasoning depth | mistral-small:24b, granite4:3b |
| Fine-tuning / domain adaptation experiments | Efficient to train, flexible licensing | mistral-small:24b, granite4:3b |
| Creative writing, marketing copy, dialogue | Natural tone generation, stylistic diversity, moderate context memory | gemma3:27b, mistral-small:24b |
| Technical document summarization | Structured understanding, moderate token context, reliability | gemma3:27b, gpt-oss:120b |
| Research prototyping / evaluation of LLM benchmarks | Open weights, transparency, large context | gpt-oss:120b, mistral-small:24b |